Proton Lumo is a privacy-first AI assistant that stores your chat history with zero-access encryption and keeps no server-side logs.

Messages are encrypted on your device, decrypted only inside Proton’s GPU environment for processing, then re-encrypted for return—what Proton calls “user-to-Lumo” (U2L) encryption, not traditional end-to-end.

Your chats aren’t used to train models, and Proton says it doesn’t send prompts to third parties or partners; it runs open-source models in European data centers.

Independent testing confirms bidirectional client-side encryption and notes the practical trade-off: context must be resent each turn, and messages are decrypted for processing.

Lumo 1.1 improves speed, reasoning, coding, and web search while keeping the same privacy guarantees.

What is Proton Lumo?

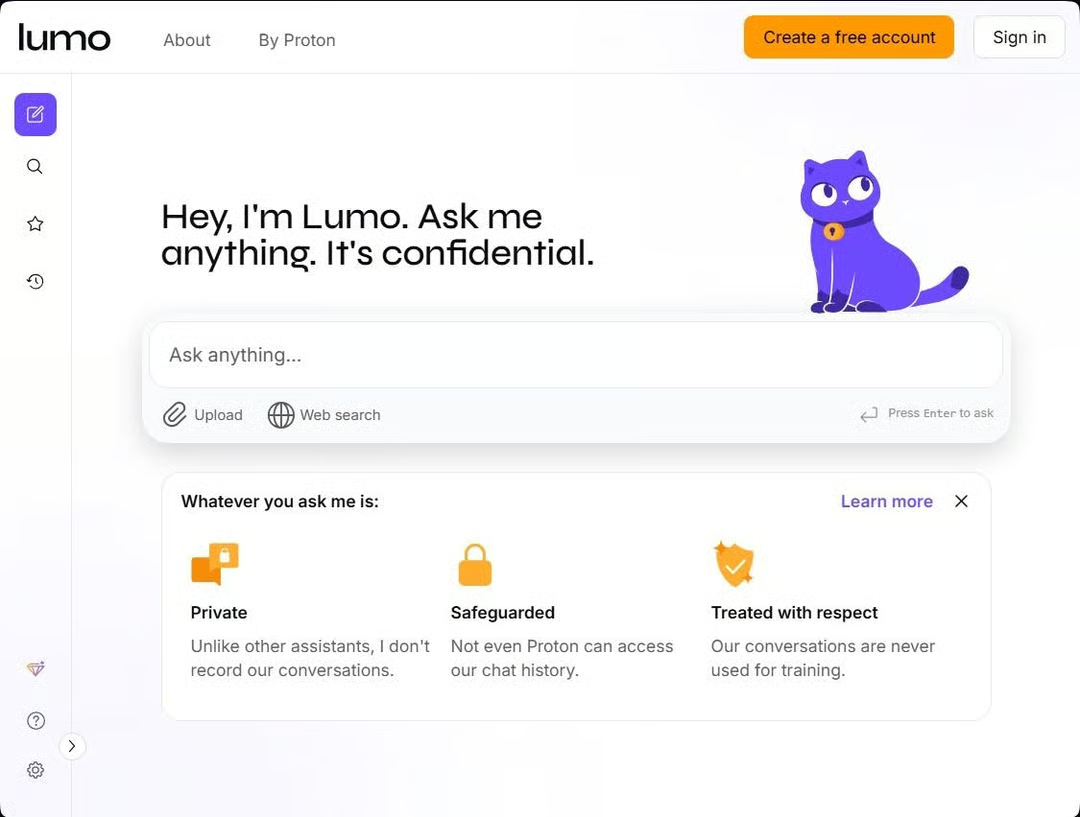

Lumo is Proton’s take on a private AI assistant—a tool you can ask to summarize documents, draft emails, write code, or research topics without handing your data to an ad-driven platform. It’s part of the broader Proton ecosystem (Mail, VPN, Drive, Pass, etc.) and is usable for free—no account required—via web and mobile apps.

Where it tries to stand out is the privacy model. Proton promises no server-side logs, zero-access encryption for saved chats, and a firm “not used to train AI” stance—plus an architecture designed to avoid data sharing with third parties.

Privacy model, explained simply

Zero-access encryption (storage)

When you save a conversation, it’s protected with zero-access encryption—meaning Proton’s servers can’t read your chat history. Only your device can decrypt it. This mirrors the encryption approach used across Proton’s other products.

User-to-Lumo encryption (in transit)

Traditional end-to-end encryption (E2EE) isn’t practical for AI because models must see your text to generate a response. Proton acknowledges this and instead implements what it calls “user-to-Lumo (U2L) encryption.” In brief:

- Your device generates a per-request symmetric key (AES) and encrypts your prompt.

- That key is encrypted with Lumo’s public PGP key so only Lumo’s GPU servers can decrypt it.

- The server decrypts the key and the prompt, processes the request, then encrypts the response before sending it back.

Proton explicitly notes this isn’t “regular” E2EE and explains why fully homomorphic encryption (which would keep data encrypted even during processing) is currently far too slow for real-world use.
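To make the U2L steps listed above concrete, here is a minimal Python sketch of the hybrid-encryption pattern they describe. It is not Proton's implementation. It assumes the third-party `cryptography` package, substitutes RSA-OAEP for the PGP public-key step, picks AES-256-GCM as the AES mode, and assumes the response is encrypted under the same per-request key. The function names, the `echo:` placeholder standing in for the model, and every payload field other than `encrypted` are hypothetical.

```python
# Sketch only: RSA-OAEP stands in for the PGP step; this is not Proton's code.
import os

from cryptography.hazmat.primitives import hashes
from cryptography.hazmat.primitives.asymmetric import padding, rsa
from cryptography.hazmat.primitives.ciphers.aead import AESGCM

# Padding used to wrap the per-request AES key with the server's public key.
OAEP = padding.OAEP(
    mgf=padding.MGF1(algorithm=hashes.SHA256()),
    algorithm=hashes.SHA256(),
    label=None,
)


def client_encrypt(prompt: str, server_public_key):
    """Step 1 and 2: fresh AES key encrypts the prompt, public key wraps the AES key."""
    key = AESGCM.generate_key(bit_length=256)
    nonce = os.urandom(12)
    request = {
        "encrypted": True,
        "wrapped_key": server_public_key.encrypt(key, OAEP),
        "nonce": nonce,
        "ciphertext": AESGCM(key).encrypt(nonce, prompt.encode("utf-8"), None),
    }
    return request, key  # the client keeps the key to read the reply


def server_process(request: dict, server_private_key) -> dict:
    """Step 3: unwrap the key, decrypt, run the (placeholder) model, re-encrypt."""
    key = server_private_key.decrypt(request["wrapped_key"], OAEP)
    prompt = AESGCM(key).decrypt(
        request["nonce"], request["ciphertext"], None
    ).decode("utf-8")
    reply = f"echo: {prompt}"  # placeholder for model inference on plaintext
    nonce = os.urandom(12)
    return {
        "encrypted": True,
        "nonce": nonce,
        "ciphertext": AESGCM(key).encrypt(nonce, reply.encode("utf-8"), None),
    }


def client_decrypt(response: dict, key: bytes) -> str:
    """Client side: decrypt the reply with the per-request key it kept."""
    return AESGCM(key).decrypt(
        response["nonce"], response["ciphertext"], None
    ).decode("utf-8")


if __name__ == "__main__":
    server_key = rsa.generate_private_key(public_exponent=65537, key_size=2048)
    request, key = client_encrypt("Summarize this contract", server_key.public_key())
    response = server_process(request, server_key)
    print(client_decrypt(response, key))
```

In this sketch the plaintext prompt exists only on the client and inside `server_process`. That second place is the trade-off that separates U2L from E2EE: whoever holds the server's private key can read the request while it is being handled.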

No logs, no model training, no third parties

Proton says Lumo does not keep server-side logs of your conversations, and saved chats are accessible only to you via zero-access encryption.

Your prompts are not used to train the model.

Proton states Lumo uses open-source models hosted in European data centers and does not send queries to third-party providers.

Real-world implications and trade-offs

Independent testing supports Proton’s claims while highlighting key nuances:

- Bidirectional client-side encryption was observed in request/response payloads (“encrypted: true”), providing an extra layer on top of HTTPS.

- Because Lumo’s servers keep no logs and are “memoryless,” the full conversation context is resent (encrypted) with each turn. That’s normal for stateless LLM services, but it means every earlier message passes through the decryption step again on each request, and payloads grow as the chat gets longer.

- Messages must be decrypted inside Proton’s secure GPU environment to be processed. If your threat model assumes a powerful adversary capable of compelled real-time capture, running a local model may still be preferable.
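The "memoryless" point in the list above is easier to see in code. The following Python sketch is purely illustrative: `stateless_server` and `ChatClient` are hypothetical names, the JSON payload shape is an assumption, and encryption is left out to keep the focus on where the conversation state lives. It shows a client that holds the history and resends all of it on every turn, because the server retains nothing between calls.

```python
import json


def stateless_server(payload: str) -> str:
    """Hypothetical memoryless endpoint: it sees only what this request carries."""
    messages = json.loads(payload)["messages"]
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I can see {len(user_turns)} user message(s); latest: {user_turns[-1]!r}"


class ChatClient:
    """Keeps the conversation on the client and resends it with each turn."""

    def __init__(self) -> None:
        self.history: list[dict] = []

    def send(self, text: str) -> tuple[str, int]:
        self.history.append({"role": "user", "content": text})
        # The whole history goes over the wire; a real client would encrypt it.
        payload = json.dumps({"messages": self.history})
        reply = stateless_server(payload)
        self.history.append({"role": "assistant", "content": reply})
        return reply, len(payload)


if __name__ == "__main__":
    client = ChatClient()
    for text in ["My name is Ada", "What is my name?"]:
        reply, size = client.send(text)
        print(f"{size:4d} bytes sent -> {reply}")
```

The payload size grows with every turn, and the server can only "remember" the first message in the second call because the client sent it again.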

Bottom line: Lumo meaningfully improves on the status quo for cloud AI privacy, but it’s not the same as classic human-to-human E2EE. That distinction matters most for users with extreme sensitivity requirements.

Features and performance

- Ghost mode: If you’re signed in, chats are normally saved with zero-access encryption. Turn on Ghost mode to have the current conversation disappear after you close it.

- Web search: Lumo can pull in current information when you ask it to, reducing hallucinations on recent topics.

- File uploads and Proton Drive integration: Analyze documents (including sensitive ones) without creating persistent server logs; attach end-to-end encrypted files from Proton Drive.

- Open-source apps and models: Proton emphasizes transparency. The web client and mobile apps are open source, and Lumo runs open-source models.

Lumo 1.1 improves context handling, code generation, planning/reasoning, and web search quality. Proton reports fewer hallucinations and more reliable multi-step results; the optional Lumo Plus tier is aimed at speed and throughput.

Who Lumo is for

Great fit: Individuals and teams who want mainstream AI utility without selling their data. Journalists, lawyers, founders, compliance-conscious teams, and privacy-first consumers will appreciate the defaults.

Good-enough privacy: Most users who simply don’t want their chats used for training or shared with ad networks will find Lumo’s model refreshing.

Consider alternatives: If your threat model requires that no third party ever decrypts your prompts—even temporarily—use a local LLM instead.

Verdict

If you’ve been waiting for an AI assistant that treats privacy as a first-class feature, Lumo is the most credible cloud-based option right now. Proton’s model isn’t magic—it can’t do the impossible with fully encrypted processing—but it pushes the state of practical AI privacy further than typical offerings, and it’s transparent about the limits. For most people and many businesses, that’s a meaningful upgrade.