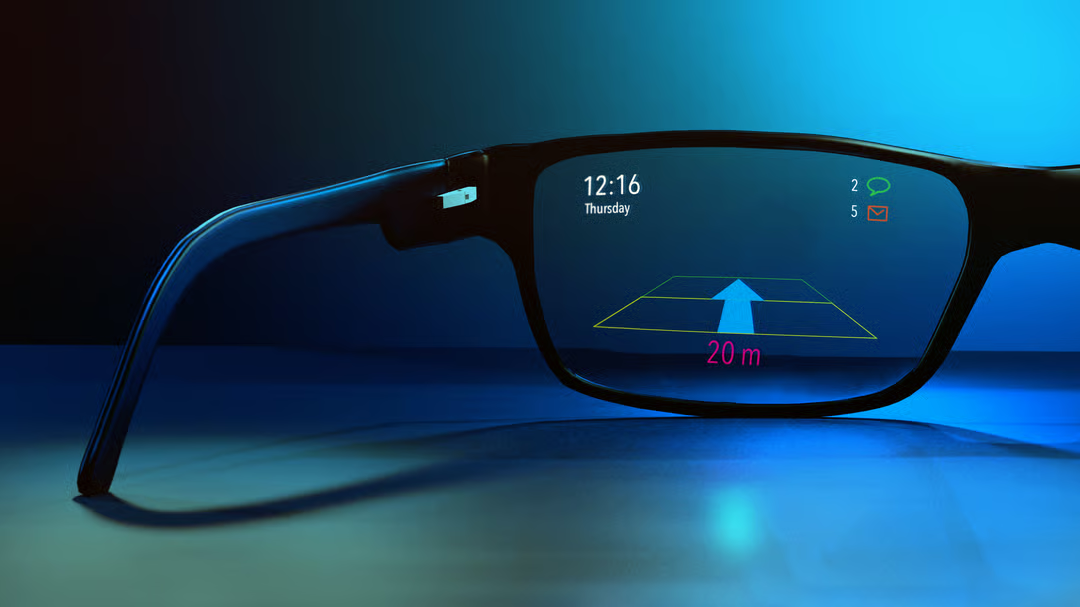

Ever wished restaurant reviews floated beside café doors as you strolled downtown? Strap on a pair of modern AR glasses and that sci-fi moment turns real in seconds.

The 30-Second Answer: How AR Glasses Work

- Sensors scan the world.
- Processors crunch the data.
- Displays create virtual pixels.
- Optics beam those pixels into your eyes.

Done. Now let’s pop the hood.

What Exactly Are AR Glasses?

Augmented-reality (AR) glasses, often lumped in with smart glasses, overlay digital content onto the real scene you already see. Unlike VR headsets, which block out the real world, they keep you in it. That is the core difference between AR and VR that most shoppers ask about.

Anatomy of an AR Glasses System

| Component | Purpose | Numbers to Watch |
|---|---|---|
| Micro-Display (OLED, μLED, LCoS) | Generates the virtual image | ≥ 1,000 nits brightness, > 90 Hz refresh |
| Waveguide / Combiner | Blends real and virtual light | 40–70° field of view, > 85 % transparency |
| Sensor Suite | Tracks head, hands, and world | 6-axis IMU (gyro + accelerometer) @ ~1 kHz, cameras, LiDAR/depth |
| Processor (SoC) | Runs OS, SLAM, graphics | Snapdragon XR2, Apple R1, custom ASIC |
| Battery | Powers everything | 3–6 Wh, 45–120 min untethered |
| Connectivity | Data in and out | Wi-Fi 6E, BT 5.3, UWB, 5G |
| Input | Lets you command the UI | Voice mics, touchpad, eye tracking, hand-tracking cameras |

The Full Data Pipeline: From Reality to Retina

1. Sensing & Scanning

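IMUs capture head motion while stereo cameras map the room in real time. Fusing the two lets the glasses build a map of the room and locate themselves within it at the same time, a process called SLAM (Simultaneous Localization and Mapping). Depth sensors add distance measurements, typically accurate to millimeters or centimeters depending on range.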
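
Full SLAM is far beyond a snippet, but the IMU half of the fusion is easy to sketch. The Python below is a minimal complementary filter, a simpler stand-in for the visual-inertial fusion real glasses use, not any vendor's algorithm. It blends a fast but drifting gyroscope with a noisy but drift-free accelerometer tilt estimate to track head pitch. The function name, the 1 kHz sample rate, the 0.98 blend factor, and the gyro bias in the example are illustrative assumptions.

```python
import math

def complementary_pitch(samples, dt=0.001, alpha=0.98):
    """Estimate head pitch (radians) from IMU samples.

    samples: iterable of (gyro_pitch_rate_rad_s, accel_x, accel_z) tuples.
    dt:      sample period in seconds (0.001 s = 1 kHz, an assumed rate).
    alpha:   trust in the integrated gyro vs. the accelerometer (assumed value).
    """
    pitch = 0.0
    for gyro_rate, ax, az in samples:
        # Gyro: integrate angular rate. Responsive, but any bias accumulates as drift.
        gyro_pitch = pitch + gyro_rate * dt
        # Accelerometer: the gravity direction gives an absolute but noisy tilt.
        accel_pitch = math.atan2(ax, az)
        # Blend: high-pass the gyro path, low-pass the accelerometer path.
        pitch = alpha * gyro_pitch + (1 - alpha) * accel_pitch
    return pitch

# 5 s of a head held still at a 10° tilt, read by a gyro with a small (hypothetical) bias.
tilt = math.radians(10)
still = [(0.01, math.sin(tilt), math.cos(tilt))] * 5000
print(round(math.degrees(complementary_pitch(still)), 1))  # 10.0
```

Integrating that biased gyro alone would drift by about 3° over the same 5 seconds; the accelerometer term keeps pulling the estimate back toward the true tilt.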

2. Edge Processing

A mobile SoC fuses sensor data, predicts your next head pose, and allocates GPU time. Motion-to-photon latency budgets hover around 20 ms; miss that mark and virtual objects appear to swim as you move your head.
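
The prediction step can be sketched with the simplest possible model: assume the head keeps turning at its current angular velocity for the length of the latency budget. This constant-velocity extrapolation is an illustrative assumption (shipping systems use richer filters), and the 100°/s head turn is a made-up example. The second function shows why the roughly 20 ms budget matters: any latency left uncompensated turns directly into angular misregistration.

```python
def predict_yaw(yaw_deg, yaw_rate_deg_s, latency_s):
    """Constant-velocity extrapolation of head yaw to the moment photons reach the eye."""
    return yaw_deg + yaw_rate_deg_s * latency_s

def misregistration_deg(yaw_rate_deg_s, latency_s):
    """How far a world-locked object appears to slip if latency is NOT compensated."""
    return abs(yaw_rate_deg_s) * latency_s

# A brisk 100 deg/s head turn with a 20 ms motion-to-photon delay (assumed numbers):
print(predict_yaw(30.0, 100.0, 0.020))     # 32.0: render for where the head will be
print(misregistration_deg(100.0, 0.020))  # 2.0: degrees of "swim" without prediction
```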

3. Rendering

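The graphics engine draws the 3D models using foveated rendering: only the small region you are looking at gets full resolution, while the periphery is rendered more coarsely. On models with eye tracking, dedicated eye-tracking cameras tell the renderer where that region is.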
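
A rough sketch of the idea: pick a shading rate for each screen tile from its angular distance (eccentricity) to the gaze point reported by the eye tracker. The 5° and 15° ring boundaries, the 1/2 and 1/4 rates, and the 50° × 50° view split into 1° tiles are hypothetical values chosen for illustration, not any headset's settings.

```python
import math

def shading_rate(eccentricity_deg):
    """Fraction of full resolution (per axis) at a given angle from the gaze point."""
    if eccentricity_deg <= 5:     # fovea: full detail (assumed ring size)
        return 1.0
    if eccentricity_deg <= 15:    # near periphery: half resolution per axis (assumed)
        return 0.5
    return 0.25                   # far periphery: quarter resolution per axis (assumed)

def foveated_cost(gaze_x, gaze_y, fov_deg=50, tile_deg=1):
    """Pixel work relative to rendering every tile at full resolution."""
    n = fov_deg // tile_deg
    total = 0.0
    for i in range(n):
        for j in range(n):
            # Tile center in degrees, with (0, 0) at the middle of the view.
            x = (i + 0.5) * tile_deg - fov_deg / 2
            y = (j + 0.5) * tile_deg - fov_deg / 2
            ecc = math.hypot(x - gaze_x, y - gaze_y)
            total += shading_rate(ecc) ** 2   # resolution drops on both axes
    return total / (n * n)

print(f"{foveated_cost(0, 0):.0%} of full-resolution pixel work")
```

With the gaze centered, these assumed rings leave only a small fraction of the full-resolution work; because the savings follow the gaze, the map has to be recomputed whenever the eye tracker reports a new gaze point.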

4. Optical Combining

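An in-coupling diffraction grating steers light from the micro-display into a thin glass waveguide, where total internal reflection carries it across the lens; an out-coupling grating then releases it toward your eye over a viewing zone called the eyebox. Light from the outside world still passes through the lens, only slightly dimmed.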
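
The in-coupling step follows the standard grating equation. The sketch below checks whether light of a given wavelength, hitting a grating of a given period, is diffracted steeply enough to be trapped in the glass by total internal reflection. The 532 nm wavelength, 400 nm period, and refractive index of 1.8 are illustrative assumptions, not the parameters of any shipping waveguide.

```python
import math

def coupled_angle_deg(wavelength_nm, period_nm, n_glass, incidence_deg=0.0, order=1):
    """Diffraction angle inside the glass from the grating equation
    n_glass * sin(theta_out) = sin(theta_in) + order * wavelength / period
    (light arriving from air, n = 1). Returns None if the order cannot propagate."""
    s = (math.sin(math.radians(incidence_deg)) + order * wavelength_nm / period_nm) / n_glass
    if abs(s) >= 1:
        return None  # evanescent: this order does not propagate in the glass
    return math.degrees(math.asin(s))

def is_trapped(angle_deg, n_glass):
    """Total internal reflection needs the ray to exceed the glass-to-air critical angle."""
    critical = math.degrees(math.asin(1 / n_glass))
    return angle_deg is not None and angle_deg > critical

# Green light, assumed 400 nm grating period, assumed high-index glass (n = 1.8):
angle = coupled_angle_deg(532, 400, 1.8)
print(round(angle, 1), is_trapped(angle, 1.8))  # 47.6 True
```

Here the critical angle is about 33.7°, so the diffracted ray at roughly 47.6° keeps bouncing along the lens until an out-coupling grating releases it.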

5. Dynamic Adjustment

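Ambient-light sensors let software raise display brightness in bright sun and lower it indoors, while calibration routines adjust image alignment to each wearer's eyes, including people who wear prescription inserts.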
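
One way to frame the brightness logic: in optical see-through glasses the virtual image is added on top of whatever real-world light passes through the lens, so legibility depends on the see-through contrast ratio CR = (L_display + T·L_background) / (T·L_background). Solving for L_display gives the luminance an auto-brightness loop should aim for. The 1.5 target ratio, the 85 % lens transmission, the panel limits, and the background luminances below are assumptions for illustration.

```python
def target_display_nits(background_nits, transmission=0.85, contrast=1.5,
                        min_nits=10.0, max_nits=3000.0):
    """Display luminance (at the eye) needed for a target see-through contrast ratio,
    clamped to what a hypothetical panel can deliver."""
    see_through = transmission * background_nits   # real-world light reaching the eye
    needed = (contrast - 1.0) * see_through        # from CR = (L_disp + L_bg) / L_bg
    return max(min_nits, min(max_nits, needed))

print(target_display_nits(100))    # 42.5: a dim office wall needs little light
print(target_display_nits(8000))   # 3000.0: a sunlit street pins the panel at its limit
```

Once the clamp kicks in, software alone cannot restore contrast, which is why brighter panels and dimming lenses matter for outdoor use.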

Optical Architectures Explained

| Architecture | How It Works | Pros | Cons |
|---|---|---|---|
| Surface-relief Waveguide | Etched gratings couple light into and out of thin glass, which guides it by total internal reflection | Slim, light | Rainbow artifacts |
| Volume / Holographic Waveguide | Gratings recorded inside the material steer light | Wide FOV | Lower brightness outdoors |
| Birdbath | Beam splitter + curved mirror | Cheap, bright | Bulky front module, darker see-through view |
| Free-Space Combiner | Partially reflective mirror in front of the eye | High clarity | Heavy, limited FOV |

“Optics decide whether the wow stays past the first demo.” — Dr. Bernard Kress, Microsoft HoloLens Lead Opticist

Tracking Tech That Glues Holograms in Place

- A 6-axis IMU plus camera SLAM keeps virtual furniture anchored to the floor.
- Eye tracking lets you select menu items just by looking at them, without lifting a hand.
- Hand-pose recognition decodes pinches and swipes.
- Beam-forming mics pick out commands like “Hey Glasses, record” over street noise.
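
Anchoring boils down to a coordinate transform: the hologram's position is stored in world coordinates, and every frame it is re-expressed in the head's frame using the latest tracked pose. The 2D, yaw-only version below is a simplification (real systems use full 6-DoF poses, typically as quaternions or 4×4 matrices), and the function name and axis convention are hypothetical.

```python
import math

def world_to_head(point, head_pos, head_yaw_deg):
    """Express a world-fixed (x, y) point in the head's frame.

    Assumed convention: +x is right, +y is straight ahead at yaw 0,
    and positive yaw turns the head counter-clockwise (to the left).
    """
    dx = point[0] - head_pos[0]
    dy = point[1] - head_pos[1]
    # Undo the head's rotation by rotating the offset through -yaw.
    a = math.radians(-head_yaw_deg)
    return (dx * math.cos(a) - dy * math.sin(a),
            dx * math.sin(a) + dy * math.cos(a))

chair = (0.0, 2.0)  # virtual chair placed 2 m ahead of the starting position
print(world_to_head(chair, (0.0, 0.0), 0))   # (0.0, 2.0): straight ahead
print(world_to_head(chair, (0.0, 0.0), 90))  # about (2.0, 0.0): after a left turn it sits to the right
```

Because the chair's world coordinates never change, it stays put while its head-relative position updates with every new pose.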

Computing on Your Face: Chips, Heat & AI

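Qualcomm’s Snapdragon XR2 pairs eight CPU cores with an Adreno GPU and an AI engine that can run tasks such as speech recognition on the device. Apple’s R1 chip, built for the Vision Pro headset, processes input from 12 cameras, five sensors, and six microphones, and Apple says it streams new images to the displays within 12 ms. Passive graphite heat spreaders wick heat away from the chips into the frame, so no single spot against your face gets uncomfortably warm.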

Power & Connectivity

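A 5 Wh pouch cell weighs about as much as two AAA batteries, yet at an average draw of around 5 W it powers roughly an hour of full-blast mixed reality. Pro models add back-pocket battery packs, shifting weight off the nose bridge. Wi-Fi 6E opens up the 6 GHz band, giving enough headroom to stream compressed video from a nearby PC or edge server that does the heavy rendering, so the glasses themselves can stay cool.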
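
The arithmetic behind those claims is short. Runtime is capacity divided by average power draw, and the bandwidth function shows why streamed video has to be compressed: raw stereo frames at headset-class resolution and refresh rate need more bits per second than real-world Wi-Fi throughput typically delivers. The 5 W draw, 1920 × 1080-per-eye resolution, 24-bit color, and 90 Hz refresh are assumptions for illustration.

```python
def runtime_minutes(battery_wh, avg_power_w):
    """Untethered runtime: energy (Wh) / power (W) = hours, converted to minutes."""
    return battery_wh / avg_power_w * 60

def raw_video_gbps(width, height, bits_per_pixel=24, fps=90, eyes=2):
    """Uncompressed bit rate for stereo video, in gigabits per second."""
    return width * height * bits_per_pixel * fps * eyes / 1e9

print(runtime_minutes(5, 5))                 # 60.0 minutes at an assumed 5 W draw
print(round(raw_video_gbps(1920, 1080), 2))  # 8.96 Gbps uncompressed
```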

User Interfaces: Talking, Touching, Looking

| Input Method | When It Shines | When It Stumbles |
|---|---|---|
| Voice | Walking, gloved hands | Privacy on a crowded subway |
| Touchpad on Temple | Precise volume or page scrolling | Winter gloves block it |
| Hand Gestures | Natural, satisfying | High compute cost; struggles in low light |
| Eye Dwell / Blink Click | Hands busy, instant | Requires calibration |
| Phone Companion | Complex text entry | Breaks immersion |

A smooth product lets you switch among these without thinking.
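
Eye-dwell selection is a small state machine: a button fires only after the gaze has rested on it continuously for a set time, so ordinary glances do not trigger clicks. A minimal sketch, assuming gaze samples arrive as (timestamp, target) pairs; the 0.8 s threshold and the class name are hypothetical.

```python
class DwellSelector:
    """Fires a selection when gaze stays on one target for `dwell_s` seconds."""

    def __init__(self, dwell_s=0.8):    # assumed dwell threshold
        self.dwell_s = dwell_s
        self.target = None   # what the user is currently looking at
        self.since = 0.0     # when the gaze landed on it
        self.fired = False   # prevents repeated clicks while still staring

    def update(self, t, target):
        """Feed one gaze sample; return the selected target or None."""
        if target != self.target:
            # Gaze moved: restart the timer on the new target.
            self.target, self.since, self.fired = target, t, False
            return None
        if target is not None and not self.fired and t - self.since >= self.dwell_s:
            self.fired = True
            return target
        return None

sel = DwellSelector()
samples = [(0.0, "Record"), (0.5, "Record"), (0.9, "Record"), (1.2, "Record")]
print([sel.update(t, tgt) for t, tgt in samples])  # [None, None, 'Record', None]
```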

AR Glasses vs VR Headsets vs Audio Smart Glasses

| Feature | AR Glasses | VR Headset | Audio-Only Glasses |
|---|---|---|---|
| Immersion | Medium | High | Low |
| Real-World Awareness | Full | None | Full |
| Typical Weight | 90–180 g (glasses-style) | 400–600 g | 45 g |
| Use in Public | Socially acceptable | Awkward | Invisible |
| Price Band | $400 – $3,500 | $300 – $1,500 | $150 – $350 |

The table summarizes the difference between AR and VR at a glance.

Killer Use Cases Happening Right Now

- Field technicians see wiring diagrams floating over turbines; Bosch Smart Service cut repair time 15 % in pilot studies.
- Surgeons at Johns Hopkins pulled CT scans into their line of sight during spinal fusion, slashing fluoroscopy exposure by 64 %.
- DHL warehouse pickers wearing Vuzix M300 glasses saw rack IDs in their field of view and boosted item-pick speed by 25 %.
- Tourists in Kyoto follow animated arrows to hidden ramen bars while live-translated menus hover beside the door.

Current Limitations & Engineering Challenges

- Narrow Field of View – A 50° field of view can feel like peering through swim goggles.
- Outdoor Brightness – Noon sun still washes out displays.
- Battery Life vs Weight – Doubling battery capacity roughly doubles battery weight, and on frame-mounted designs that weight presses on your nose.
- Privacy Perception – Tiny cameras spark “Are you filming me?” pushback.
- Vergence–Accommodation Conflict – Your eyes converge on a hologram that seems to sit on the desk but must focus at the display's fixed focal distance (often a meter or two away, sometimes optical infinity). The mismatch can cause eye strain after hours of use.
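
Optometrists measure this kind of mismatch in diopters (1 divided by the distance in meters). The sketch compares where your eyes converge (the hologram's apparent distance) with where they must focus (the display's focal plane). The 2 m focal plane and the 0.5 D comfort threshold are rule-of-thumb assumptions, not specifications of any particular device.

```python
def vac_diopters(hologram_m, focal_plane_m):
    """Vergence-accommodation mismatch in diopters.
    Pass float('inf') as focal_plane_m for a display focused at optical infinity."""
    return abs(1 / hologram_m - 1 / focal_plane_m)

COMFORT_D = 0.5  # assumed rule-of-thumb comfort limit

for distance in (2.0, 1.0, 0.5):
    mismatch = vac_diopters(distance, focal_plane_m=2.0)  # assumed 2 m focal plane
    verdict = "comfortable" if mismatch <= COMFORT_D else "likely strain over long sessions"
    print(f"hologram at {distance} m: {mismatch:.2f} D -> {verdict}")
```

A desk hologram at arm's length (0.5 m) on a 2 m focal plane lands at 1.5 D, well outside the assumed comfort band.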

The Road Ahead

- MicroLED panels already hit 10,000 nits in labs.
- Electrochromic lenses act like instant sunglasses outdoors.
- 5G edge rendering offloads heavy graphics to servers near the cell tower.
- Smart contact lenses, such as Mojo Vision's microLED prototype, hint at a display you would barely notice, though Mojo Vision paused that project in 2023.
- OpenXR and WebXR standards give developers common APIs across devices, so one build can run on more hardware.
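
Edge rendering only works if the whole round trip still fits the roughly 20 ms motion-to-photon budget mentioned in the pipeline section. The tally below is a back-of-the-envelope sketch; every stage duration is an assumed placeholder, not a measurement of any 5G network or device.

```python
BUDGET_MS = 20.0  # motion-to-photon target cited for AR

# Hypothetical per-frame stage times in milliseconds (placeholders, not measurements).
EDGE_STAGES_MS = {
    "pose uplink": 3.0,
    "render on edge server": 5.0,
    "video encode": 3.0,
    "video downlink": 4.0,
    "decode + display": 4.0,
}

def check_budget(stages_ms, budget_ms=BUDGET_MS):
    """Sum the stage latencies and report whether they fit the budget."""
    total = sum(stages_ms.values())
    return total, total <= budget_ms

total, ok = check_budget(EDGE_STAGES_MS)
print(f"{total:.1f} ms of {BUDGET_MS:.0f} ms budget: {'fits' if ok else 'over budget'}")
```

A couple of extra milliseconds of network delay pushes this assumed total over budget, which is why edge rendering depends on low-latency links and servers physically close to the user.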

Buying Guide: How to Pick Your First Pair

- Define the mission – Navigation, hands-free filming, or enterprise work?
- Check the optics – Look for ≥ 1,500 nits if you plan to use them outside.
- Feel the fit – IPD (interpupillary distance) adjusters and nose pads matter more than specs.
- Probe the ecosystem – Does it run native Android apps or only a closed store?
- Count the watt-hours – Look for swappable batteries if you need all-day shifts.
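
Counting watt-hours comes down to comparing the energy a shift needs with the energy each pack holds. The sketch below, with hypothetical numbers for the average draw and pack size, estimates how many charged packs an all-day shift requires.

```python
import math

def batteries_for_shift(shift_hours, battery_wh, avg_power_w):
    """Fully charged packs needed to cover a shift without recharging.

    Assumes hot-swappable packs and a steady average draw (both hypothetical inputs).
    """
    energy_needed_wh = shift_hours * avg_power_w
    return math.ceil(energy_needed_wh / battery_wh)

# An 8-hour shift on glasses drawing an assumed 3 W, using 5 Wh packs:
print(batteries_for_shift(8, 5, 3))  # 5 (24 Wh needed)
```

Real draw varies with brightness and tracking load, so leave some headroom beyond the estimate.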

| Price Tier | Example Models | Notable Trade-Off |
|---|---|---|
| < $500 | Ray-Ban Meta, Xreal Air | Ray-Ban Meta has no display; Xreal Air tethers to a phone or PC |
| $500–$1,200 | Rokid Max, Vuzix Blade 2 | Limited FOV |
| $1,200–$3,500 | Microsoft HoloLens 2, Magic Leap 2 | Heavier, enterprise- and developer-focused |

Frequently Asked Questions on How AR Glasses Work

Do AR glasses harm eyesight?

No evidence shows retinal damage at normal brightness, though extended sessions can cause dry eyes.

Can they fit over prescription lenses?

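Some headset-style models, such as HoloLens 2, fit over regular glasses; most glasses-style models instead offer magnetic prescription (Rx) inserts.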

Will AR replace smartphones?

Not this decade. Battery density and social norms still favor phones for long sessions.

How private is the data?

Many enterprise models encrypt sensor streams on the device. Consumer units vary, so always read the privacy policy.

Conclusion: See the World Differently

Understanding how AR glasses actually work turns the gadget from a magic trick into a toolbox. Sensors read your world, processors paint fresh data, and waveguides pipe that insight straight to your retina. Each advance (brighter microLEDs, faster edge AI, slimmer batteries) pushes the tech closer to everyday eyewear.

Ready to try a pair? Drop your questions below or subscribe for real-world reviews, discount alerts, and monthly updates on spatial computing. The future is in sight, literally.